Video Instance Segmentation by Instance Flow Assembly

Abstract

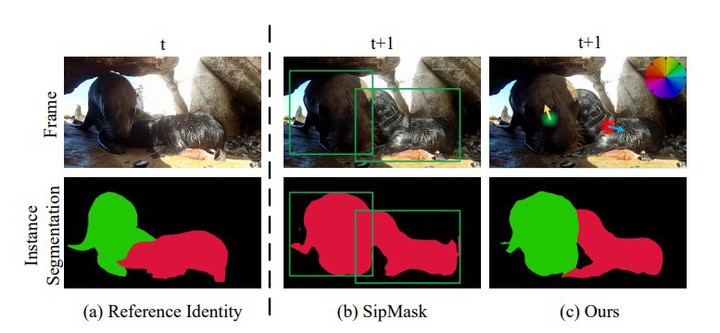

Instance segmentation is a challenging task that aims to classify and segment all object instances of specific classes. While two-stage box-based methods achieve top performance in the image domain, their advantage does not easily extend to the video domain. This is because they usually operate on features or images cropped from detected bounding boxes without alignment, and thus fail to capture pixel-level temporal consistency. We build on the observation that bottom-up methods, which operate on box-free features, can offer accurate spatial correlations across frames that can be fully exploited for object-level and pixel-level tracking. We first propose a bottom-up framework equipped with a temporal context fusion module to better encode inter-frame correlations. Intra-frame cues for semantic segmentation and object localization are simultaneously extracted and reconstructed by corresponding decoders after a shared backbone. For efficient and robust tracking among instances, we introduce an instance-level correspondence across adjacent frames, represented by a center-to-center flow termed instance flow, to assemble the messy dense temporal correspondences. Experiments demonstrate that the proposed method outperforms state-of-the-art online methods (taking image-level input) on the challenging YouTube-VIS dataset.
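
To make the idea of a center-to-center instance flow concrete, the following minimal sketch shows one way such a flow could be used to associate instances across adjacent frames. It is an illustration under stated assumptions, not the procedure used in this work: the function name `match_by_instance_flow`, the greedy nearest-center assignment, and the `max_dist` threshold are all hypothetical choices introduced here. Each instance center in frame t is shifted by its flow vector, and the shifted point is paired with the closest unclaimed center in frame t+1.

```python
import math


def match_by_instance_flow(centers_t, flows, centers_next, max_dist=20.0):
    """Associate instances of frame t with instances of frame t+1.

    centers_t    : list of (x, y) instance centers in frame t
    flows        : list of (dx, dy) center-to-center flow vectors, one per center
    centers_next : list of (x, y) instance centers in frame t+1
    max_dist     : hypothetical gating threshold in pixels (an assumption)

    Returns a dict mapping index in centers_t -> index in centers_next.
    Instances left unmatched can be treated as ended or newly appeared tracks.
    """
    candidates = []
    for i, ((x, y), (dx, dy)) in enumerate(zip(centers_t, flows)):
        # Where the flow says this instance center should land in frame t+1.
        px, py = x + dx, y + dy
        for j, (cx, cy) in enumerate(centers_next):
            dist = math.hypot(px - cx, py - cy)
            if dist <= max_dist:
                candidates.append((dist, i, j))

    # Greedy one-to-one assignment, closest pairs first.
    candidates.sort()
    matches = {}
    used_next = set()
    for dist, i, j in candidates:
        if i not in matches and j not in used_next:
            matches[i] = j
            used_next.add(j)
    return matches


if __name__ == "__main__":
    centers_t = [(10.0, 10.0), (50.0, 40.0)]
    flows = [(5.0, 0.0), (-3.0, 2.0)]
    centers_next = [(48.0, 42.0), (15.0, 11.0), (90.0, 90.0)]
    print(match_by_instance_flow(centers_t, flows, centers_next))
```

In this toy example the third center in frame t+1 receives no match, so a tracker built on this sketch could treat it as a newly appearing instance. A greedy assignment is used only for brevity; an optimal bipartite matching could be substituted without changing the interface.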